The Epic Continues…

Last week it was 34 years since the original launch of Mathematica and what’s now the Wolfram Language. And through all those years we’ve energetically continued building further and further, adding ever more capabilities, and steadily extending the domain of the computational paradigm.

In recent years we’ve established something of a rhythm, delivering the fruits of our development efforts roughly twice a year. We released Version 13.0 on December 13, 2021. And now, roughly six months later, we’re releasing Version 13.1. As usual, even though it’s a “.1” release, it’s got a lot of new (and updated) functionality, some of which we’ve worked on for many years but finally now brought to fruition.

For me it’s always exciting to see what we manage to deliver in each new version. And in Version 13.1 we have 90 completely new functions—as well as 203 existing functions with substantial updates. And beyond what appears in specific functions, there’s also major new functionality in Version 13.1 in areas like user interfaces and the compiler.

The Wolfram Language as it exists today encompasses a vast range of functionality. But its great power comes not just from what it contains, but also from how coherently everything in it fits together. And for nearly 36 years I’ve taken it as a personal responsibility to ensure that that coherence is maintained. It’s taken both great focus and lots of deep intellectual work. But as I experience them every day in my use of the Wolfram Language, I’m proud of the results.

And for the past four years I’ve been sharing the “behind the scenes” of how it’s achieved, by livestreaming our Wolfram Language design review meetings. It’s an unprecedented level of openness, and of engagement with the community. In designing Version 13.1 we’ve done 90 livestreams, lasting more than 96 hours. And in opening up our process we’re providing visibility not only into what was built for Version 13.1, but also into why it was built, and how decisions about it were made.

But, OK, so what finally is in Version 13.1? Let’s talk about some highlights….

Beyond Listability: Introducing Threaded

From the very beginning of Mathematica and the Wolfram Language we’ve had the concept of listability: if you add two lists, for example, their corresponding elements will be added:
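As a minimal sketch of listability (the symbols here are illustrative, not taken from the original notebook), adding two lists of equal length combines them element by element:

```wolfram
(* Plus has the attribute Listable, so equal-length lists combine element by element *)
{a, b, c} + {x, y, z}
(* {a + x, b + y, c + z} *)
```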


It’s a very convenient mechanism, that typically does exactly what you’d want. And for 35 years we haven’t really considered extending it. But if we look at code that gets written, it often happens that there are parts that basically implement something very much like listability, but slightly more general. And in Version 13.1 we have a new symbolic construct, Threaded, that effectively allows you to easily generalize listability.

Consider:


This uses ordinary listability, effectively computing:


But what if you want instead to “go down a level” and thread {x,y} into the lowest parts of the first list? Well, now you can use Threaded to do that:


On its own, Threaded is just a symbolic wrapper:


But as soon as it appears in a function—like Plus—that has attribute Listable, it specifies that the listability should be applied after what’s specified inside Threaded is “threaded” at the lowest level.
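The difference between ordinary listability and Threaded can be sketched with a small matrix. The values below are an illustrative assumption rather than the original example, and Threaded requires Version 13.1 or later:

```wolfram
m = {{1, 2}, {3, 4}};

(* Ordinary listability pairs x with the first row and y with the second row *)
m + {x, y}
(* {{1 + x, 2 + x}, {3 + y, 4 + y}} *)

(* Threaded pushes {x, y} down to the lowest level, so each row gets {x, y} added *)
m + Threaded[{x, y}]
(* {{1 + x, 2 + y}, {3 + x, 4 + y}} *)
```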

Here’s another example. Create a list:


How should we then multiply each element by {1,–1}? We could do this with:


But now we’ve got Threaded, and so instead we can just say:
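Here is one way the two approaches might look side by side. The list pts is a made-up stand-in for the list created above, and the explicit Map version is just one of several ways to write it without Threaded:

```wolfram
pts = {{1, 2}, {3, 4}, {5, 6}};   (* hypothetical list of pairs *)

(* Without Threaded: map over the pairs and multiply each one by {1, -1} *)
Map[# * {1, -1} &, pts]
(* {{1, -2}, {3, -4}, {5, -6}} *)

(* With Threaded: Times threads {1, -1} into the lowest level directly *)
pts * Threaded[{1, -1}]
(* {{1, -2}, {3, -4}, {5, -6}} *)
```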


You can give Threaded as an argument to any listable function, not just Plus and Times:


You can use Threaded and ordinary listability together:


You can have several Threadeds together as well:


Threaded, by the way, gets its name from the function Thread, which explicitly does “threading”, as in:


By default, Threaded will always thread into the lowest level of a list:


Here’s a “real-life” example of using Threaded like this. The data in a 3D color image consists of a rank-3 array of triples of RGB values:


This multiplies every RGB triple by {0,1,2}:


Most of the time you either want to use ordinary listability that operates at the top level of a list, or you want to use the default form of Threaded, that operates at the lowest level of a list. But Threaded has a more general form, in which you can explicitly say what level you want it to operate at.

Here’s the default case:


Here’s level 1, which is just like ordinary listability:


And here’s threading into level 2:


Threaded provides a very convenient way to do all sorts of array-combining operations. There’s additional complexity when the object being “threaded in” itself has multiple levels. The default in this case is to align the lowest level in the thing being threaded in with the lowest level of the thing into which it’s being threaded:


Here now is “ordinary listability” behavior:


For the arrays we’re looking at here, the default behavior is equivalent to:


Sometimes it’s clearer to write this out in a form like


which says that the first level of the array inside the Threaded is to be aligned with the second level of the outside array. In general, the default case is equivalent to –1 → –1, specifying that the bottom level of the array inside the Threaded should be aligned with the bottom level of the array outside.
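To experiment with the level specifications described in this section, a symbolic rank-3 array is convenient, because each result shows exactly which index x and y were aligned with. This is only a sketch: the array arr is an assumption for illustration, and the last line uses the explicit "inner level -> outer level" form as described in the text.

```wolfram
arr = Array[a, {2, 2, 2}];   (* symbolic rank-3 array with elements a[i, j, k] *)

arr + Threaded[{x, y}]          (* default: thread into the lowest level *)
arr + Threaded[{x, y}, 1]       (* level 1: behaves like ordinary listability, arr + {x, y} *)
arr + Threaded[{x, y}, 2]       (* level 2: x and y are aligned with the second index *)
arr + Threaded[{x, y}, 1 -> 2]  (* explicit form: level 1 of {x, y} aligned with level 2 of arr *)
```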

Yet More Language Convenience Functions

In every version of the Wolfram Language we try to add new functions that will make general programs easier to write and easier to read. In Version 13.1 the most important such function is Threaded. But there are quite a few others as well.

First in our collection for Version 13.1 is DeleteElements, which deletes specified elements from a list. It’s like Complement, except that it doesn’t reorder the list (analogous to the way DeleteDuplicates removes duplicate elements, without reordering in the way that Union does):
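A small sketch of the difference from Complement, using an illustrative list:

```wolfram
list = {z, a, b, c, a};

DeleteElements[list, {a}]   (* {z, b, c}: the remaining elements keep their order *)
Complement[list, {a}]       (* {b, c, z}: the result is sorted *)
```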


DeleteElements also allows more detailed control of how many copies of an element can be deleted. Here it is up to 2 b’s and 3 c’s:


Talking of DeleteDuplicates, another new function in Version 13.1 is DeleteAdjacentDuplicates:
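As an illustrative sketch (the list is made up), DeleteAdjacentDuplicates collapses only runs of repeated elements, whereas DeleteDuplicates removes every later repeat:

```wolfram
list = {a, a, b, a, a, a, c, c};

DeleteAdjacentDuplicates[list]   (* {a, b, a, c} *)
DeleteDuplicates[list]           (* {a, b, c} *)
```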


We’ve had Union, Intersection and Complement since Version 1.0. In Version 13.1 we’re adding SymmetricDifference: find elements that (in the 2-argument case) are in one list or the other, but not both. For example, what countries are in the G20 or the EU, but not both?


Let’s say you have several lists, and you want to know what elements are unique to just one of these lists, and don’t occur in multiple lists. The new UniqueElements tells you.
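Both set operations can be tried on small made-up lists. In this sketch, UniqueElements should return one sublist per input list, containing the elements that occur in that list and in no other:

```wolfram
(* Elements in one list or the other, but not both *)
SymmetricDifference[{1, 2, 3}, {2, 3, 4}]
(* {1, 4} *)

(* For each list, the elements that appear in none of the other lists *)
UniqueElements[{{a, b, c}, {b, c, d}, {c, d, e}}]
```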

As an example, this tells us which letters uniquely occur in various alphabets:


We’ve had Map and Apply, with short forms /@ and @@, ever since Version 1.0. In Version 4.0 we added @@@ to represent Apply[f,expr,1]. But we never added a separate function to correspond to @@@. And over the years, there’ve been quite a few occasions where I’ve basically wanted, for example, to do something like “Fold[@@@, ...]”. Obviously Fold[Apply[#1,#2,1]&,...] would work. But it feels as if there’s a “missing” named function. Well, in Version 13.1, we added it: MapApply is equivalent to @@@:
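A minimal sketch with a symbolic head f (an illustrative choice):

```wolfram
MapApply[f, {{a, b}, {c, d}}]   (* {f[a, b], f[c, d]} *)
f @@@ {{a, b}, {c, d}}          (* the same result, written with the short form *)
```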


Another small convenience added in Version 13.1 is SameAs, essentially an operator form of SameQ. Why is such a construct needed? Well, there are always tradeoffs in language design. And back in Version 1.0 we decided to make SameQ work with any number of arguments (so you can test whether a whole sequence of things are the same). But this means that for consistency SameQ[expr] must always return True, so it can’t serve as an operator form of SameQ. And that’s why now in Version 13.1 we’re adding SameAs, which joins the family of operator-form functions like EqualTo and GreaterThan:
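A short sketch of why the one-argument form of SameQ can’t be an operator, and how SameAs fills that role (the test values are illustrative):

```wolfram
SameQ[x]                           (* True: a single argument is trivially "all the same" *)

SameAs[1][1]                       (* True *)
Select[{1, 1., 2, 1}, SameAs[1]]   (* {1, 1}: the real number 1. is not identical to the integer 1 *)
```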


Procedural programming (often with “variables hanging out”) isn’t the preferred style for most Wolfram Language code. But sometimes it’s the most convenient way to do things. And in Version 13.1 we’ve added a small piece of streamlining by introducing the function Until. Ever since Version 1.0 we’ve had While[test,body] which repeatedly evaluates body while test is True. But if test isn’t True even at first, While won’t ever evaluate body. Until[test,body] does things the other way around: it evaluates body until test becomes True. So if test isn’t True at first, Until will still evaluate body once, in effect only looking at the test after it’s evaluated the body.
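The difference shows up when the loop condition is already settled before the first pass. This sketch uses an illustrative counter n:

```wolfram
n = 10;
While[n < 5, n++];   (* the test is False at the start, so the body never runs *)
n                    (* 10 *)

n = 10;
Until[n > 5, n++];   (* the body runs once, and only then is the test checked *)
n                    (* 11 *)
```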

Last but not least in the list of new core language functions in Version 13.1 is ReplaceAt. Replace attempts to apply a replacement rule to a complete expression—or a whole level in an expression. ReplaceAll (/.) does the same thing for all subparts of an expression. But quite often one wants more control over where replacements are done. And that’s what ReplaceAt provides:


An important feature is that it also has an operator form:


Why is this important? The answer is that it gives a symbolic way to specify not just what replacement is made, but also where it is made. And for example this is what’s needed in specifying steps in proofs, say as generated by FindEquationalProof.
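As a minimal sketch (the list and rule are illustrative), here is ReplaceAt in both its direct and operator forms, with ReplaceAll for comparison:

```wolfram
ReplaceAll[{a, a, a, a}, a -> x]     (* {x, x, x, x}: every match is replaced *)

ReplaceAt[{a, a, a, a}, a -> x, 2]   (* {a, x, a, a}: only position 2 is touched *)

(* Operator form: a symbolic "replace here" step that can be applied later *)
step = ReplaceAt[a -> x, 2];
step[{a, a, a, a}]                   (* {a, x, a, a} *)
```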

Emojis! And More Multilingual Support

What is a character? Back when Version 1.0 was released, characters were represented as 8-bit objects: usually ASCII, but you could pick another “character encoding” (hence the CharacterEncoding option) if you wanted. Then in the early 1990s came Unicode, which we were one of the very first companies to support. Now “characters” could be 16-bit constructs, with nearly 65,536 possible “glyphs” allocated across different languages and uses (including some mathematical symbols that we introduced). Back in the early 1990s Unicode was a newfangled thing, that operating systems didn’t yet have built-in support for. But we were betting on Unicode, and so we built our own infrastructure for handling it.

Thirty years later Unicode is indeed the universal standard for representing character-like things. But somewhere along the way, it turned out the world needed more than 16 bits’ worth of character-like things. At first it was about supporting variants and historical writing systems (think: cuneiform or Linear B). But then came emoji. And it became clear that—yes, arguably in a return to the Egyptian hieroglyph style of communication—there was an almost infinite number of possible pictorial emoji that could be made, each of them being encoded as their own Unicode code point.

It’s been a slow expansion. Original 16-bit Unicode is “plane 0”. Now there are up to 16 additional planes. Not quite 32-bit characters, but given the way computers work, the approach now is to allow characters to be represented by 32-bit objects. It’s far from trivial to do that uniformly and efficiently. And for us it’s been a long process to upgrade everything in our system—from string manipulation to notebook rendering—to handle full 32-bit characters. And that’s finally been achieved in Version 13.1.

But that’s far from all. In English we’re pretty much used to being able to treat text as a sequence of letters and other characters, with each character being separate. Things get a bit more complicated when you start to worry about diphthongs like æ. But if there are fairly few of these, it works to just introduce them as individual “Unicode characters” with their own code point. But there are plenty of languages—like Hindi or Khmer—where what appears in text like an individual character is really a composite of letter-like constructs, diacritical marks and other things. Such composite characters are normally represented as “grapheme clusters”: runs of Unicode code points. The rules for handling these things can be quite complicated. But after many years of development, major operating systems now successfully do it in most cases. And in Version 13.1 we’re able to make use of this to support such constructs in notebooks.

OK, so what does 32-bit Unicode look like? Using CharacterRange (or FromCharacterCode) we can dive in and just see what’s out there in “character space”. Here’s part of ordinary 16-bit Unicode space:


Here’s some of what happens in “plane-1” above character code 65535, in this case catering to “legacy computations”:


Plane-0 (below 65535) is pretty much all full. Above that, things are sparser. But around 128000, for example, there are lots of emoji:


You can use these in the Wolfram Language, and in notebooks, just like any other characters. So, for example, you can have wolf and ram variables:


The 🐏 sorts before the 🐺 because it happens to have a numerically smaller character code:
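The character codes can be checked directly. This sketch assumes Version 13.1 or later, where characters above 65535 are handled as single 32-bit characters; the variable names are illustrative:

```wolfram
ram = FromCharacterCode[128015];    (* U+1F40F, the ram emoji *)
wolf = FromCharacterCode[128058];   (* U+1F43A, the wolf emoji *)

ToCharacterCode[ram <> wolf]
(* {128015, 128058} *)
```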


In a notebook, you can enter emoji (and other Unicode characters) using standard operating system tools, like ctrl+cmd+space on macOS:


The world of emoji is rapidly evolving, and that can sometimes lead to problems. Here’s an emoji range that includes some very familiar emoji, but on at least one of my computer systems also includes emoji that display only as ![]():


The reason that happens is that my default fonts don’t contain glyphs for those emoji. But all is not lost. In Version 13.1 we’re including a font from Twitter that aims to contain glyphs for pretty much all emoji:


Beyond dealing with individual Unicode characters, there’s also the matter of composites, and grapheme clusters. In Hindi, for example, two characters can combine into something that’s rendered (and treated) as one:


The first character here can stand on its own:


But the second one is basically a modifier that extends the first character (in this particular case adding a vowel sound):


But once the composite हि has been formed it acts “textually” just like a single character, in the sense that, for example, the cursor moves through it in one step. When it appears “computationally” in a string, however, it can still be broken into its constituent Unicode elements:


This kind of setup can be used not only for a language like Hindi but also for European languages that have diacritical marks like umlauts:


Even though this looks like one character—and in Version 13.1 it’s treated like that for “textual” purposes, for example in notebooks—it is ultimately made up of two distinct “Unicode characters”:


In this particular case, though, this can be “normalized” to a single character:


It looks the same, but now it really is just one character:
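The normalization step can be sketched with explicit character codes. The choice of “o” plus a combining diaeresis is an illustrative assumption, and "NFC" is the standard Unicode composed normalization form:

```wolfram
(* "o" (code 111) followed by the combining diaeresis U+0308 (code 776) *)
decomposed = FromCharacterCode[{111, 776}];
ToCharacterCode[decomposed]
(* {111, 776} *)

(* Normalizing to composed form yields the single character U+00F6 *)
composed = CharacterNormalize[decomposed, "NFC"];
ToCharacterCode[composed]
(* {246} *)
```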


Here’s a “combined character” that you can form


but for which there’s no single character to which it normalizes:


The concept of composite characters applies not only to ordinary text, but also to emojis. For example, take the emoji for a woman


together with the emoji for a microscope


and combine them with the “zero-width-joiner” character (which, needless to say, doesn’t display as anything)


and you get (yes, somewhat bizarrely) a woman scientist!


Needless to say, you can do this computationally—though the “calculus” of what’s been defined so far in Unicode is fairly bizarre:
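One way to build the joined emoji computationally is from its code points. This is a sketch with illustrative variable names; whether the result renders as a single glyph depends on the fonts available on your system:

```wolfram
woman = 128105;        (* U+1F469 *)
zwj = 8205;            (* U+200D, the zero-width joiner *)
microscope = 128300;   (* U+1F52C *)

(* A three-code-point string that should display as one "woman scientist" emoji *)
FromCharacterCode[{woman, zwj, microscope}]
```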


I’m sort of hoping that the future of semantics doesn’t end up being defined by the way emojis combine 😎.

As one last (arguably hacky) example of combining characters, Unicode defines various “two-letter” combinations to be flags. Type ![]() then ![](), and you get 🇺🇸!

Once again, this can be made computational:
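A possible sketch: the helper flag below is hypothetical (not a built-in function) and relies on the fact that the Unicode regional indicator symbols run consecutively from U+1F1E6 (for “A”, code 127462) to U+1F1FF (for “Z”):

```wolfram
(* Hypothetical helper: shift each uppercase letter code (A = 65) into the regional indicator block *)
flag[code_String] :=
  FromCharacterCode[ToCharacterCode[ToUpperCase[code]] - 65 + 127462]

flag["US"]   (* the two code points 127482 and 127480, rendered as a flag where fonts allow *)
```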


(And, yes, it’s an interesting question what renders here, and what doesn’t. In some operating systems, no flags are rendered, and we have to pull in a special font to do it.)

A Toolbar for Every Notebook


It used to be that the only “special key sequence” one absolutely should know in order to use Wolfram Notebooks was shift+enter. But gradually there have started to be more and more high-profile operations that are conveniently done by “pressing a button”. And rather than expecting people to remember all those special key sequences (or think to look in menus for them) we’ve decided to introduce a toolbar that will be displayed by default in every standard notebook. Version 13.1 has the first iteration of this toolbar. Subsequent versions will support an increasing range of capabilities.

It’s not been easy to design the default toolbar (and we hope you’ll like what we came up with!) The main problem is that Wolfram Notebooks are very general, and there are a great many things you can do with them—which it’s challenging to organize into a manageable toolbar. (Some special types of notebooks have had their own specialized toolbars for a while, which were easier to design by virtue of their specialization.)

So what’s in the toolbar? On the left are a couple of evaluation controls:

![]() means “Evaluate”, and is simply equivalent to pressing shift+return (as its tooltip says). ![]() means “Abort”, and will stop a computation. To the right of ![]() is the menu shown above. The first part of the menu allows you to choose what will be evaluated. (Don’t forget the extremely useful “Evaluate In Place” that lets you evaluate whatever code you have selected, say to turn RGBColor[1,0,0] in your input into ![]().) The bottom part of the menu gives a couple of more detailed (but highly useful) evaluation controls.

Moving along the toolbar, we next have:


If your cursor isn’t already in a cell, the pulldown allows you to select what type of cell you want to insert (it’s similar to the ![]() “tongue” that appears within the notebook). (If your cursor is already inside a cell, then like in a typical word processor, the pulldown will tell you the style that’s being used, and let you reset it.)

![]() gives you a little panel to control the appearance of cells, changing their background colors, frames, dingbats, etc.

Next come cell-related buttons: ![](). The first is for cell structure and grouping:


![]() copies input from above (cmd+L). It’s an operation that I, for one, end up doing all the time. I’ll have an input that I evaluate. Then I’ll want to make a modified version of the input to evaluate again, while keeping the original. So I’ll copy the input from above, edit the copy, and evaluate it again.

![]() copies output from above. I don’t find this quite as useful as copy input from above, but it can be helpful if you want to edit output for subsequent input, while leaving the “actual output” unchanged.

The ![]() block is all about content in cells. ![]() (which you’ll often press repeatedly) is for extending a selection, in effect going ever upwards in an expression tree. (You can get the same effect by pressing ctrl+. or by multiclicking, but it’s a lot more convenient to repeatedly press a single button than to have to precisely time your multiclicks.)

![]() is the single-button way to get ctrl+= for entering natural language input:


![]() iconizes your selection:


Iconization is something we introduced in Version 11.3, and it’s something that’s proved incredibly useful, particularly for making code easy to read (say by iconizing details of options). (You can also iconize a selection from the right-click menu, or with ctrl+cmd+'.)

![]() is most relevant for code, and toggles commenting (with ![]()) a selection. ![]() brings up a palette for math typesetting. ![]() lets you enter ![]() that will be converted to Wolfram Language math typesetting. ![]() brings up a drawing canvas. ![]() inserts a hyperlink (cmd+shift+H).

If you’re in a text cell, the toolbar will look different, now sporting a text formatting control: ![]()

Most of this is fairly standard. ![]() lets you insert “code voice” material. ![]() and ![]() are still in the toolbar for inserting math into a text cell.

On the right-hand end of the toolbar are three more buttons: ![](). ![]() gives you a dialog to publish your notebook to the cloud. ![]() opens documentation, either specifically looking up whatever you have selected in the notebook, or opening the front page (“root guide page”) of the main Wolfram Language documentation. Finally, ![]() lets you search in your current notebook.

As I mentioned above, what’s in Version 13.1 is just the first iteration of our default toolbar. Expect more features in later versions. One thing that’s notable about the toolbar in general is that it’s 100% implemented in Wolfram Language. And in addition to adding a great deal of flexibility, this also means that the toolbar immediately works on all platforms. (By the way, if you don’t want the toolbar in a particular notebook—or for all your notebooks—just right-click the background of the toolbar to pick that option.)

Polishing the User Interface

We first introduced Wolfram Notebooks with Version 1.0 of Mathematica, in 1988. And ever since then, we’ve been progressively polishing the notebook interface, doing more with every new version.

The ctrl+= mechanism for entering natural language (“Wolfram|Alpha-style”) input debuted in Version 10.0, and in Version 13.1 it’s now accessible from the ![]() button in the new default notebook toolbar. But what actually is ![]() when it’s in a notebook? In the past, it’s been a fairly complex symbolic structure mainly suitable for evaluation. But in Version 13.1 we’ve made it much simpler. And while that doesn’t have any direct effect if you’re just using ![]() purely in a notebook, it does have an effect if you copy ![]() into another application, like pure-text email. In the past this produced something that would work if pasted back into a notebook, but definitely wasn’t particularly readable. In Version 13.1, it’s now simply the Wolfram Language interpretation of your natural language input:


What happens if the computation you do in a notebook generates a huge output? Ever since Version 6.0 we’ve had some form of “output limiter”, but in Version 13.1 it’s become much sleeker and more useful. Here’s a typical example:


Talking of big outputs (as well as other things that keep the notebook interface busy), another change in Version 13.1 is the new asynchronous progress overlay on macOS. This doesn’t affect other platforms where this problem had already been solved, but on the Mac changes in the OS had led to a situation where the notebook front end could mysteriously pop to the front on your desktop—a situation that has now been resolved.

One of the slightly unusual user interface features that’s existed ever since Version 1.0 is the Why the Beep? menu item—that lets you get an explanation of any “error beep” that occurs while you’re running the system. The function Beep lets you generate your own beep. And now in Version 13.1 you can use Beep["string"] to set up an explanation of “your beep”, that users can retrieve through the Why the Beep? menu item.

The basic notebook user interface works as much as possible with standard interface elements on all platforms, so that when these elements are updated, we always automatically get the “most modern” look. But there are parts of the notebook interface that are quite special to Wolfram Notebooks and are always custom designed. One that hadn’t been updated for a while is the Preferences dialog—which now in Version 13.1 gets a full makeover:


When you tell the Wolfram Language to do something, it normally just goes off and does it, without asking you anything (well, unless it explicitly needs input, needs a password, etc.) But what if there’s something that it might be a good idea to do, though it’s not strictly necessary? What should the user interface for this be? It’s tricky, but I think we now have a good solution that we’ve started deploying in Version 13.1.

In particular, in Version 13.1, there’s an example related to the Wolfram Function Repository. Say you use a function for which an update is available. What now happens is that a blue box is generated that tells you about the update—though it still keeps going with the computation, ignoring the update:


If you click the Update Now button in the blue box you can do the update. And then the point is that you can run the computation again (for example, just by pressing shift+enter), and now it’ll use the update. In a sense the core idea is to have an interface where there are potentially multiple passes, and where a computation always runs to completion, but you have an easy way to change how it’s set up, and then run it again.

Large-Scale Code Editing

One of the great things about the Wolfram Language is that it works well for programs of any scale—from less than a line long to millions of lines long. And for the past several years we’ve been working on expanding our support for very large Wolfram Language programs. Using LSP (Language Server Protocol) we’ve provided the capability for most standard external IDEs to automatically do syntax coloring and other customizations for the Wolfram Language.

In Version 13.1 we’re also adding a couple of features that make large-scale code editing in notebooks more convenient. The first (and widely requested) is block indent and outdent of code. Select the lines you want to indent or outdent and simply press tab or shift+tab to indent or outdent them:


Ever since Version 6.0 we’ve had the ability to work with .wl package files (as well as .wls script files) using our notebook editing system. A new default feature in Version 13.1 is numbering of all code lines that appear in the underlying file (and, yes, we correctly align line numbers accounting for the presence of non-code cells):


So now, for example, if you get a syntax error from Get or a related function, you’ll immediately be able to use the line number it reports to find where it occurs in the underlying file.

Scribbling on Notebooks

In Version 12.2 we introduced Canvas as a convenient interface for interactive drawing in notebooks. In Version 13.1 we’re introducing the notion of toggling a canvas on top of any cell.

Given a cell, just select it and press ![](), and you’ll get a canvas:


Now you can use the drawing tools in the canvas to create an annotation overlay:


If you evaluate the cell, the overlay will stay. (You can get rid of the “canvas wrapper” by applying Normal.)

Trees Continue to Grow 🌱🌳

In Version 12.3 we introduced Tree as a new fundamental construct in the Wolfram Language. In Version 13.0 we added a variety of styling options for trees, and in Version 13.1 we’re adding more styling as well as a variety of new fundamental features.

An important update to the fundamental Tree construct in Version 13.1 is the ability to name branches at each node, by giving them in an association:


All tree functions now include support for associations:


In many uses of trees the labels of nodes are crucial. But particularly in more abstract applications one often wants to deal with unlabeled trees. In Version 13.1 the function UnlabeledTree (roughly analogously to UndirectedGraph) takes a labeled tree, and basically removes all visible labels. Here is a standard labeled tree


and here’s the unlabeled analog:


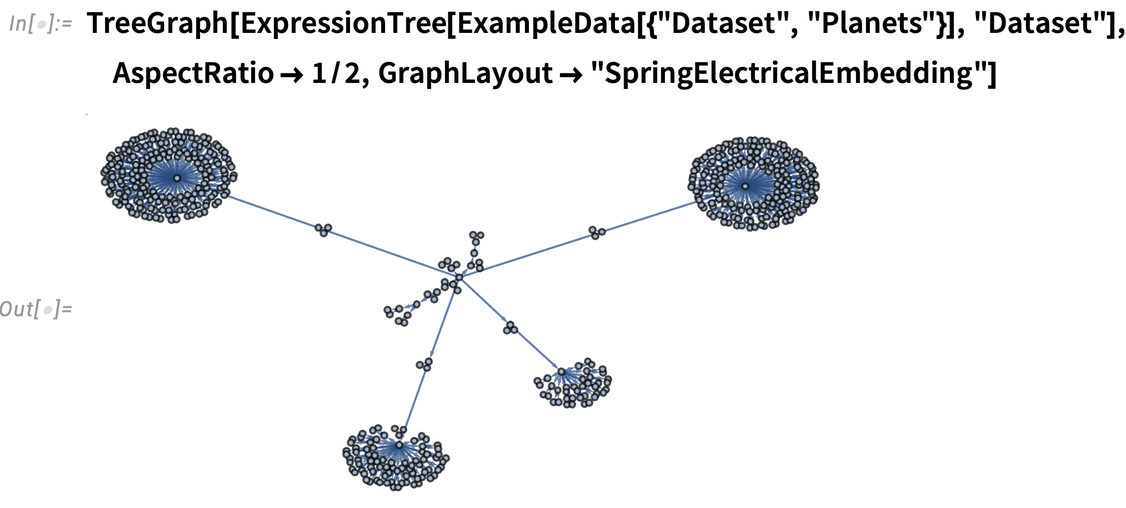

In Version 12.3 we introduced ExpressionTree for deriving trees from general symbolic expressions. Our plan is to have a wide range of “special trees” appropriate for representing different specific kinds of symbolic expressions. We’re beginning this process in Version 13.1 by, for example, having the concept of “Dataset trees”. Here’s ExpressionTree converting a dataset to a tree:


And now here’s TreeExpression “inverting” that, and producing a dataset:


(Remember the convention that *Tree functions return a tree; while Tree* functions take a tree and return something else.)

Here’s a “graph rendering” of a more complicated dataset tree:


The new function TreeLeafCount lets you count the total number of leaf nodes on a tree (basically the analog of LeafCount for a general symbolic expression):
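A small sketch with a hand-built tree (the tree itself is an illustrative assumption). Children given as plain expressions rather than Tree objects are treated as leaves:

```wolfram
(* Root a has a subtree b (with leaves c and d) and a leaf e *)
t = Tree[a, {Tree[b, {c, d}], e}];

TreeLeafCount[t]   (* counts the leaves c, d and e *)
```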


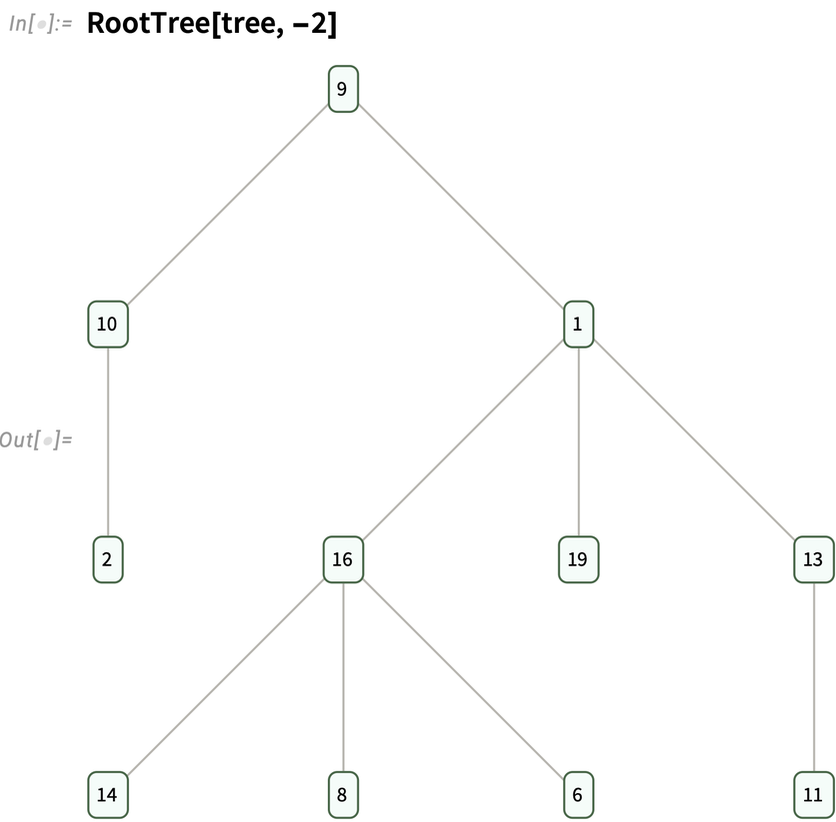

Another new function in Version 13.1 that’s often useful in getting a sense of the structure of a tree without inspecting every node is RootTree. Here’s a random tree:


RootTree can get a subtree that’s “close to the root”:


It can also get a subtree that’s “far from the leaves”, in this case going down to elements that are at level –2 in the tree:


In some ways the styling of trees is like the styling of graphs—though there are some significant differences as a result of the hierarchical nature of trees. By default, options inserted into a particular tree element affect only that tree element:


But you can give rules that specify how elements in the subtree below that element are affected:


In Version 13.1 there is now detailed control available for styling both nodes and edges in the tree. Here’s an example that gives styling for parent edges of nodes:

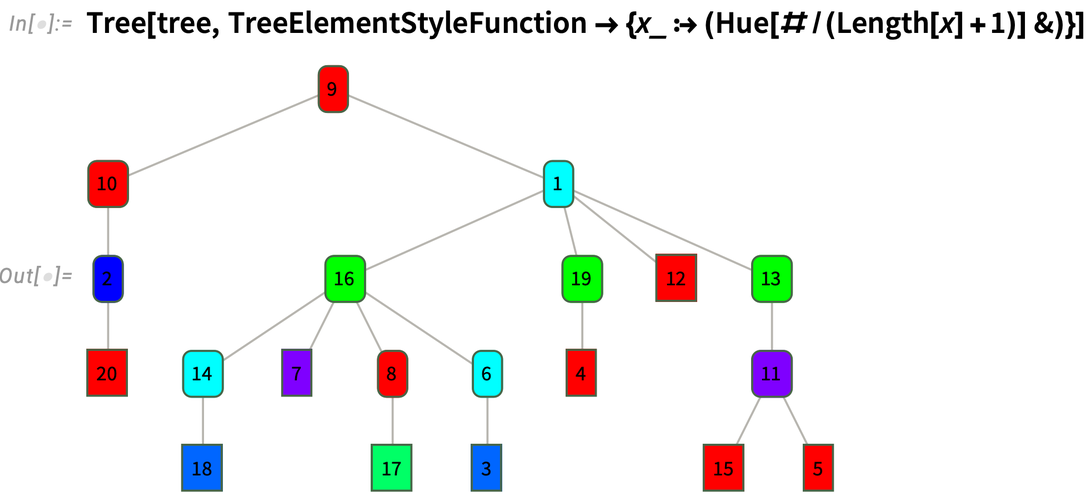

Options like TreeElementStyle determine styling from the positions of elements. TreeElementStyleFunction, on the other hand, determines styling by applying a function to the data at each node:

This uses both data and position information for each node:

In analogy with VertexShapeFunction for graphs, TreeElementShapeFunction provides a general mechanism to specify how nodes of a tree should be rendered. This named setting for TreeElementShapeFunction makes every node be displayed as a circle:

Yet More Date-Handling Details

We first introduced dates into Wolfram Language in Version 2.0, and we introduced modern date objects in Version 10.0. But to really make dates fully computable, there are many detailed cases to consider. And in Version 13.1 we’re dealing with yet another of them. Let’s say you’ve got the date January 31, 2022. What date is one month later—given that there’s no February 31, 2022?

If we define a month “physically”, it corresponds to a certain fractional number of days:

And, yes, we can use this to decide what is a month after January 31, 2022:

Slightly confusing here is that we’re dealing with date objects of “day” granularity. We can see more if we go down to the level of minutes:

If one’s doing something like astronomy, this kind of “physical” date computation is probably what one wants. But if one’s doing everyday “human” activities, it’s almost certainly not what one wants; instead, one wants to land on some calendar date or another.

Here’s the default in the Wolfram Language:

But now in Version 13.1 we can parametrize more precisely what we want. This default is what we call "RollBackward": wherever we “land” by doing the raw date computation, we “roll backward” to the first valid date. An alternative is "RollForward":

Whatever method one uses, there are going to be weird cases. Let’s say we start with several consecutive dates:

With "RollBackward" we have the weirdness of repeating February 28:

With "RollForward" we have the weirdness of repeating March 1:

Is there any alternative? Yes, we can use "RollOver":

This keeps advancing through days, but then has the weirdness that it goes backwards. And, yes, there’s no “right answer” here. But in Version 13.1 you can now specify exactly what you want the behavior to be.
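
To make the three behaviors concrete, here is a hedged sketch. It assumes the roll behavior is selected through a Method option of DatePlus; that option name is an assumption on my part and should be checked against the DatePlus documentation:

```wolfram
start = DateObject[{2022, 1, 31}];

(* default behavior, described above as "RollBackward" *)
DatePlus[start, Quantity[1, "Months"]]

(* assumption: the roll behavior is chosen with the Method option *)
DatePlus[start, Quantity[1, "Months"], Method -> "RollForward"]
DatePlus[start, Quantity[1, "Months"], Method -> "RollOver"]

(* several consecutive start dates under each of the three settings *)
dates = DateObject[{2022, 1, #}] & /@ Range[28, 31];
Table[
 DatePlus[d, Quantity[1, "Months"], Method -> m],
 {m, {"RollBackward", "RollForward", "RollOver"}}, {d, dates}]
```

The table gives one row per setting, which makes the repeated or out-of-order dates easy to spot.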

The same issue arises not just for months, but also, for example, for years. And it affects not just DatePlus, but also DateDifference.

It’s worth mentioning that in Version 13.1, in addition to dealing with the detail we’ve just discussed, the whole framework for doing “date arithmetic” in Wolfram Language has been made vastly more efficient, sometimes by factors of hundreds.

Capturing Video & More

We’ve had ImageCapture since Version 8.0 (in 2010) and AudioCapture since Version 11.1 (in 2017). Now in Version 13.1 we have VideoCapture. By default VideoCapture[] gives you a GUI that lets you record from your camera:

Clicking the down arrow opens up a preview window that shows your current video:

When you’ve finished recording, VideoCapture returns the Video object you created:

VideoCapture[]

Now you can process or analyze this Video object just like you would any other:

VideoCapture[] is a blocking operation that waits until you’ve finished recording, then returns a result. But VideoCapture can also be used “indirectly” as a dynamic control tied to a variable such as video. This lets you asynchronously start and stop recording, even as you do other things in your Wolfram Language session, and every time you stop recording, the value of video is updated.

VideoCapture records video from your camera (and you can use the ImageDevice option to specify which one if you have several). VideoScreenCapture, on the other hand, records from your computer screen—in effect providing a video analog of CurrentScreenImage.

VideoScreenCapture[], like VideoCapture[], is a blocking operation as far as the Wolfram Language is concerned. But if you want to watch something happening in another application (say, a web browser), it’ll do just fine. And in addition, you can give a screen rectangle to capture a particular region on your screen:

VideoScreenCapture[{{0, 50}, {640, 498}}]

Then for example you can analyze the time series of RGB color levels in the video that’s produced:
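
One possible way to get such a time series (a sketch, not necessarily what was used for the original plot) is to apply an image measurement to every frame with VideoTimeSeries:

```wolfram
(* record the screen region used above; this blocks until recording is stopped *)
video = VideoScreenCapture[{{0, 50}, {640, 498}}];

(* mean value of each color channel, computed frame by frame *)
levels = VideoTimeSeries[ImageMeasurements[#, "Mean"] &, video]
```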

What if you want to screen record from a notebook? Well, then you can use the asynchronous dynamic recording mechanism that exists in VideoScreenCapture just as it does in VideoCapture.

By the way, both VideoCapture and VideoScreenCapture by default capture audio. You can switch off audio recording either from the GUI, or with the option AudioInputDevice→None.

If you want to get fancy, you can screen record a notebook in which you are capturing video from your camera (which in turn shows you capturing a video, etc.):

VideoScreenCapture[EvaluationNotebook[]]

In addition to capturing video from real-time goings-on, you can also generate video directly from functions like AnimationVideo and SlideShowVideo—as well as by “touring” an image using TourVideo. In Version 13.1 there are some significant enhancements to TourVideo.

Take an animal scene and extract bounding boxes for elephants and zebras:

Now you can make a tour video that visits each animal:

Define a path function of a variable t:

Now we can use the path function to make a “spiralling” tour video:

College Calculus

Transforming college calculus was one of the early achievements of Mathematica. But even now we’re continuing to add functionality to make college calculus ever easier and smoother to do—and more immediately connectable to applications. We’ve always had the function D for taking derivatives at a point. Now in Version 13.1 we’re adding ImplicitD for finding implicit derivatives.

So, for example, it can find the derivative of xy with respect to x, with y determined implicitly by the constraint x² + y² = 1:

Leave out the first argument and you’ll get the standard college calculus “find the slope of the tangent line to a curve”:
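
Here is a sketch of both forms for the circle constraint, together with a cross-check that uses only D and Solve. Mathematically one expects the slope dy/dx = -x/y, and hence y - x²/y for the derivative of xy; the exact output form may differ:

```wolfram
(* derivative of x y with respect to x, with y defined implicitly by the circle *)
ImplicitD[x y, x^2 + y^2 == 1, y, x]

(* with the first argument omitted: slope dy/dx of the tangent line *)
ImplicitD[x^2 + y^2 == 1, y, x]

(* cross-check with D, treating y as an explicit function y[x] *)
slope = Solve[D[x^2 + y[x]^2 == 1, x], y'[x]]
D[x y[x], x] /. slope
```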

So far all of this is a fairly straightforward repackaging of our longstanding calculus functionality. And indeed these kinds of implicit derivatives have been available for a long time in Wolfram|Alpha. But for Mathematica and the Wolfram Language we want everything to be as general as possible—and to support the kinds of things that show up in differential geometry, and in things like asymptotics and validation of implicit solutions to differential equations. So in addition to ordinary college-level calculus, ImplicitD can do things like finding a second implicit derivative on a curve defined by the intersection of two surfaces:

In Mathematica and the Wolfram Language Integrate is a function that just gets you answers. (In Wolfram|Alpha you can ask for a step-by-step solution too.) But particularly for educational purposes—and sometimes also when pushing boundaries of what’s possible—it can be useful to do integrals in steps. And so in Version 13.1 we’ve added the function IntegrateChangeVariables for changing variables in integrals.

An immediate issue is that when you specify an integral with Integrate[...], Integrate will just go ahead and do the integral:

But for IntegrateChangeVariables you need an “undone” integral. And you can get this using Inactive, as in:

And given this inactive form, we can use IntegrateChangeVariables to do a “trig substitution”:

The result is again an inactive form, now stating the integral differently. Activate goes ahead and actually does the integral:
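
The whole workflow can be sketched as follows. The integrand and limits are my own choice (the quarter-circle area, which is π/4), and I’m assuming the transformation can be given in the form x == Sin[t]:

```wolfram
(* Inactive keeps Integrate from evaluating immediately *)
integral = Inactive[Integrate][Sqrt[1 - x^2], {x, 0, 1}];

(* trig substitution x = Sin[t]; the result is again an inactive integral *)
substituted = IntegrateChangeVariables[integral, t, x == Sin[t]]

(* Activate actually does the transformed integral *)
Activate[substituted]

(* direct evaluation of the original integral, for comparison *)
Activate[integral]
```

Both evaluations should agree, since a change of variables doesn’t change the value of a definite integral.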

IntegrateChangeVariables can deal with multiple integrals as well—and with named coordinate systems. Here it’s transforming a double integral to polar coordinates:

Although the basic “structural” transformation of variables in integrals is quite straightforward, the whole story of IntegrateChangeVariables is considerably more complicated. “College-level” changes of variables are usually carefully arranged to come out easily. But in the more general case, IntegrateChangeVariables ends up having to do nontrivial transformations of geometric regions, difficult simplifications of integrands subject to certain constraints, and so on.

In addition to changing variables in integrals, Version 13.1 also introduces DSolveChangeVariables for changing variables in differential equations. Here it’s transforming the Laplace equation to polar coordinates:
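
For reference, this is the standard textbook form of that transformation, with x = r cos θ and y = r sin θ:

$$
\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} = 0
\quad\longrightarrow\quad
\frac{\partial^2 u}{\partial r^2} + \frac{1}{r}\frac{\partial u}{\partial r} + \frac{1}{r^2}\frac{\partial^2 u}{\partial \theta^2} = 0
$$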

Sometimes a change of variables can just be a convenience. But sometimes (think General Relativity) it can lead one to a whole different view of a system. Here, for example, an exponential transformation converts the usual Cauchy–Euler equation to a form with constant coefficients:
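
As a worked version of that last statement (with generic constant coefficients a and b, which are my notation), substituting x = e^t gives x y′ = dy/dt and x² y″ = d²y/dt² − dy/dt, so that:

$$
x^2 \frac{d^2 y}{dx^2} + a\,x \frac{dy}{dx} + b\,y = 0
\quad\xrightarrow{\;x = e^{t}\;}\quad
\frac{d^2 y}{dt^2} + (a - 1)\frac{dy}{dt} + b\,y = 0
$$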

Fractional Calculus

The first derivative of x² is 2x; the second derivative is 2. But what is the ½th derivative? It’s a question that was asked (for example by Leibniz) even in the first years of calculus. And by the 1800s Riemann and Liouville had given an answer, which in Version 13.1 can now be computed by the new FractionalD:

And, yes, do another ½th derivative and you get back the 1st derivative:

In the more general case we have:

And this works even for negative derivatives, so that, for example, the (–1)st derivative is an ordinary integral:
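
Here is a short sketch of these cases. By the power rule for the Riemann–Liouville derivative, the order-α derivative of x^n is Gamma[n + 1]/Gamma[n + 1 - α] x^(n - α), so by hand the half derivative of x² should be 8 x^(3/2)/(3 Sqrt[π]):

```wolfram
(* half derivative of x^2 *)
half = FractionalD[x^2, {x, 1/2}]

(* a second half derivative should reproduce the ordinary first derivative 2 x *)
FractionalD[half, {x, 1/2}]

(* order -1 corresponds to an ordinary integral starting at 0, here x^3/3 *)
FractionalD[x^2, {x, -1}]
```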

It can be at least as difficult to compute a fractional derivative as an integral. But FractionalD can still often do it, though the result can quickly become quite complicated.

Why is FractionalD a separate function, rather than just being part of a generalization of D? We discussed this for quite a while. And the reason we introduced the explicit FractionalD is that there isn’t a unique definition of fractional derivatives. In fact, in Version 13.1 we also support the Caputo fractional derivative (or differintegral) CaputoD.

For the ½th derivative of x², the answer is still the same:

But as soon as a function isn’t zero at x = 0 the answer can be different:
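
The simplest illustration I can think of is a constant function, which is certainly nonzero at x = 0. The expected values in the comments are worked out by hand from the two definitions, and the printed form may differ:

```wolfram
(* Riemann–Liouville: expected 1/Sqrt[Pi x], which is not zero *)
FractionalD[1, {x, 1/2}]

(* Caputo: expected 0, because the ordinary derivative of a constant is taken first *)
CaputoD[1, {x, 1/2}]
```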

CaputoD is a particularly convenient definition of fractional differentiation when one’s dealing with Laplace transforms and differential equations. And in Version 13.1 we can now not only compute CaputoD but also do integral transforms and solve equations that involve it.

The differential equations involved can be of fractional order, or even of πth order. Their solutions typically involve MittagLefflerE, a function (which we introduced in Version 9.0) that plays the same kind of role for fractional derivatives that Exp plays for ordinary derivatives.

More Math—Some Long Awaited

In February 1990 an internal bug report was filed against the still-in-development Version 2.0 of Mathematica:

It’s taken a long time (and similar issues have been reported many times), but in Version 13.1 we can finally close this bug!

Consider the differential equation (the Clairaut equation):

What DSolve does by default is to give the generic solution to this equation, in terms of the parameter 𝕔1. But the subtle point (which in optics is associated with caustics) is that the family of solutions for different values of 𝕔1 has an envelope which isn’t itself part of the family of solutions, but is also a solution:

In Version 13.1 you can request that solution with the option IncludeSingularSolutions→True:
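
As a sketch, take one particular Clairaut equation, y = x y′ + (y′)². This specific equation is my choice for illustration and may not be the one used originally. Its generic solutions are the straight lines y = c x + c², and their envelope is the parabola y = -x²/4:

```wolfram
(* an assumed Clairaut equation y = x y' + f(y') with f(p) = p^2 *)
eqn = y[x] == x y'[x] + y'[x]^2;

(* generic one-parameter family of straight lines *)
DSolve[eqn, y[x], x]

(* also request the singular (envelope) solution *)
DSolve[eqn, y[x], x, IncludeSingularSolutions -> True]

(* check by hand that the envelope y = -x^2/4 satisfies the equation *)
Simplify[eqn /. y -> Function[x, -x^2/4]]
```

The last line gives True, confirming that the envelope is itself a solution even though it isn’t a member of the straight-line family.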

And here’s a plot of it:

DSolve was a new function (back in 1991) in Version 2.0. Another new function in Version 2.0 was Residue. And in Version 13.1 we’re also adding an extension to Residue: the function ResidueSum. And while Residue finds the residue of a complex function at a specific point, ResidueSum finds a sum of residues.
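
A small sketch of the difference, using a function of my own choosing with simple poles at z = 0 and z = 2. By hand the residues are -1/2 and 1/2, so the total over the plane should be 0 and the sum inside the unit disk should be -1/2. I’m assuming here that a region is given to ResidueSum as a constraint paired with the function:

```wolfram
f = 1/(z (z - 2));

Residue[f, {z, 0}]                 (* residue at the pole z = 0 *)
Residue[f, {z, 2}]                 (* residue at the pole z = 2 *)

ResidueSum[f, z]                   (* sum over all poles in the complex plane *)
ResidueSum[{f, Abs[z] < 1}, z]     (* only the poles inside the unit disk *)
```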

This computes the sum of all residues for a function, across the whole complex plane:

This computes the sum of residues within a particular region, in this case the unit disk:

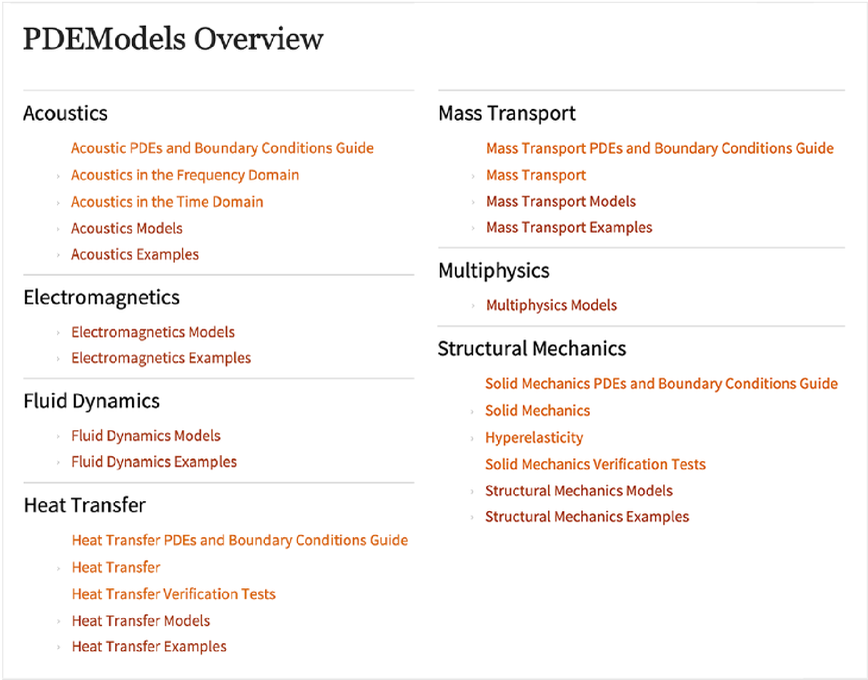

Create Your Own “Guide to Functions” Pages

An important part of the built-in documentation for the Wolfram Language is what we call “guide pages”: pages that organize functions (and other constructs) to give an overall “cognitive map” and summary of some area.

In Version 13.1 it’s now easy to create your own custom guide pages. You can list built-in functions or other constructs, as well as things from the Wolfram Function Repository and other repositories.

Go to the “root page” of the Documentation Center and press the icon:

You’ll get a blank custom guide page:

Fill in the guide page however you want, then use Deploy to deploy the page either locally, or to your cloud account. Either way, the page will now show up in the menu at the top of the root guide page (and it’ll also show up in search).

You might end up creating just one custom guide page for your favorite functions. Or you might create several, say one for each task or topic you commonly deal with. Guide pages aren’t about putting in the effort to create full-scale documentation; they’re much more lightweight, and aimed more at providing quick (“what was that function called?”) reminders and “big-picture” maps—leveraging all the specific function and other documentation that already exists.

Visual Effects & Beautification

At first it seemed like a minor feature. But once we’d implemented it, we realized it was much more useful than we’d expected. Just as you can style a graphics object with its color (and, as of Version 13.0, its filling pattern), now in Version 13.1 you can style it with its drop shadowing:

Drop shadowing turns out to be a nice way to “bring graphics to life” or to emphasize one element over others.

It works well in geo graphics as well:

DropShadowing allows detailed control over the shadows: what direction they’re in, how blurred they are and what color they are:
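
As a rough sketch, the default shadow and a customized one might look like this. The argument order in the second call (offset, blur radius, color) is my assumption and should be checked against the DropShadowing documentation:

```wolfram
(* default drop shadow on a disk *)
Graphics[{DropShadowing[], Orange, Disk[]}]

(* assumed argument order: offset, blur radius, color *)
Graphics[{DropShadowing[{4, -4}, 8, Gray], Orange, Disk[]}]
```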

Drop shadowing is more complicated “under the hood” than one might imagine. And when possible it actually works using hardware GPU pixel shaders—the same technology that we’ve used since Version 12.3 to implement material-based surface textures for 3D graphics. In Version 13.1 we’ve explicitly exposed some well-known underlying types of 3D shading. Here’s a geodesic polyhedron (yes, that’s another new function in Version 13.1), with its surface normals added (using the again new function EstimatedPointNormals):

Here’s the most basic form of shading: flat shading of each facet (and the specularity in this case doesn’t “catch” any facets):

Here now is Gouraud shading, with a somewhat-faceted glint:

And then there’s Phong shading, looking somewhat more natural for a sphere:

Ever since Version 1.0, we’ve had an interactive way to rotate—and zoom into—3D graphics. (Yes, the mechanism was a bit primitive 34 years ago, but it rapidly got to more or less its modern form.) But in Version 13.1 we’re adding something new: the ability to “dolly” into a 3D graphic, imitating what would happen if you actually walked into a physical version of the graphic, as opposed to just zooming your camera:

And, yes, things can get a bit surreal (or “treky”), for example when dollying in and then zooming out.

3D Voronoi!

There are some capabilities that—over the course of years—have been requested over and over again. In the past these have included infinite undo, high dpi displays, multiple axis plots, and others. And I’m happy to say that most of these have now been taken care of. But there’s one—seemingly obscure—“straggler” that I’ve heard about for well over 25 years, and that I’ve actually also wanted myself quite a few times: 3D Voronoi diagrams. Well, in Version 13.1, they’re here.

Set up 25 random points in 3D:

Now make a Voronoi mesh for these points:
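
A minimal sketch of these two steps, assuming the points are drawn uniformly from the unit cube (the variable names are mine):

```wolfram
pts = RandomReal[1, {25, 3}];      (* 25 random points in the unit cube *)
mesh = VoronoiMesh[pts]            (* 3D Voronoi mesh, new in Version 13.1 *)

cells = MeshPrimitives[mesh, 3];   (* the individual polyhedral cells *)
Length[cells]
```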

To “see inside” we can use opacity:

Why was this so hard? In a Voronoi there’s a cell that surrounds each original point, and includes everywhere that’s closer to that point than to any other. We’ve had 2D Voronoi meshes for a long time:

But there’s something easier about the 2D case. The issue is not so much the algorithm for generating the cells as it is how the cells can be represented in such a way that they’re useful for subsequent computations. In the 2D case each cell is just a polygon.

But in the 3D case the cells are polyhedra, and to make a Voronoi mesh we have to have a polyhedral mesh where all the polyhedra fit together. And it’s taken us many years to build the large tower of computational geometry necessary to support this. There’s a somewhat simpler case based purely on cells that are always either simplices or hexahedra—that we’ve used for finite-element solutions to PDEs for a while. But in a true 3D Voronoi that’s not enough: the cells can be any (convex) polyhedral shape.

Here are the “puzzle piece” cells for the 3D Voronoi mesh we made above:

Reconstructing Geometry from Point Clouds

Pick 500 random points inside an annulus:

Version 13.1 now has a general function reconstructing geometry from a cloud of points:
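
In outline, and assuming the default annulus, the steps look something like this:

```wolfram
pts = RandomPoint[Annulus[], 500];   (* 500 random points in the default annulus *)

ListPlot[pts, AspectRatio -> 1]      (* the raw point cloud *)

ReconstructionMesh[pts]              (* the reconstructed region *)
```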

(Of course, given only a finite number of points, the reconstruction can’t be expected to be perfect.)

The function also works in 3D:

ReconstructionMesh is a general superfunction that uses a variety of methods, including extended versions of the functions ConcaveHullMesh and GradientFittedMesh that were introduced in Version 13.0. And in addition to reconstructing “solid objects”, it can also reconstruct lower-dimensional things like curves and surfaces:

A related function new in Version 13.1 is EstimatedPointNormals, which reconstructs not the geometry itself, but normal vectors to each element in the geometry:

New in Visualization

In every new version for the past 30 years we’ve steadily expanded our visualization capabilities, and Version 13.1 is no exception. One function we’ve added is TernaryListPlot—an analog of ListPlot that conveniently plots triples of values where what one’s trying to emphasize is their ratios. For example let’s plot data from our knowledgebase on the sources of electricity for different countries:
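
Independently of the knowledgebase data, the basic call can be sketched with a few made-up triples (these numbers are purely hypothetical):

```wolfram
(* hypothetical shares of three electricity sources; each triple sums to 1 *)
shares = {{0.7, 0.2, 0.1}, {0.3, 0.3, 0.4}, {0.1, 0.8, 0.1}, {0.5, 0.5, 0.0}};

TernaryListPlot[shares]
```

A triple with a zero component, like the last one, lands on an edge of the triangle.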

The plot shows the “energy mixture” for different countries, with the ones on the bottom axis being those with zero nuclear. Inserting colors for each axis, along with grid lines, helps explain how to read the plot:

Most of the time plots are plotting numbers, or at least quantities. In Version 13.0, we extended functions like ListPlot to also accept dates. In Version 13.1 we’re going much further, and introducing the possibility of plotting what amount to purely symbolic values.

Let’s say our data consists of letters A through C:

How do we plot these? In Version 13.1 we just specify an ordinal scale:

OrdinalScale lets you specify that certain symbolic values are to be treated as if they are in a specified order. There’s also the concept of a nominal scale—represented by NominalScale—in which different symbolic values correspond to different “categories”, but in no particular order.

Representing Amounts of Chemicals

Molecule lets one symbolically represent a molecule. Quantity lets one symbolically represent a quantity with units. In Version 13.1 we now have the new construct ChemicalInstance that’s in effect a merger of these, allowing one to represent a certain quantity of a certain chemical.

This gives a symbolic representation of 1 liter of acetone (by default at standard temperature and pressure):

We can ask what the mass of this instance of this chemical is:

ChemicalConvert lets us do a conversion returning particular units:

Here’s instead a conversion to moles:

This directly gives the amount of substance that 1 liter of acetone corresponds to:

This generates a sequence of straight-chain hydrocarbons:

Here’s the amount of substance corresponding to 1 g of each of these chemicals:

ChemicalInstance lets you specify not just the amount of a substance, but also its state, in particular temperature and pressure. Here we’re converting 1 kg of water at 4° C to be represented in terms of volume:

Chemistry as Rule Application: Symbolic Pattern Reactions

At the core of the Wolfram Language is the abstract idea of applying transformations to symbolic expressions. And at some level one can view chemistry and chemical reactions as a physical instantiation of this idea, where one’s not dealing with abstract symbolic constructs, but instead with actual molecules and atoms.

In Version 13.1 we’re introducing PatternReaction as a symbolic representation for classes of chemical reactions—in effect providing an analog for chemistry of Rule for general symbolic expressions.

Here’s an example of a “pattern reaction”:

The first argument specifies a pair of “reactant” molecule patterns to be transformed into “product” molecule patterns. The second argument specifies which atoms in which reactant molecules map to which atoms in which product molecules. If you mouse over the resulting pattern reaction, you’ll see corresponding atoms “light up”:

Given a pattern reaction, we can use ApplyReaction to apply the reaction to concrete molecules:

Here are plots of the resulting product molecules:

The molecule patterns in the pattern reaction are matched against subparts of the concrete molecules, then the transformation is done, leaving the other parts of the molecules unchanged. In a sense it’s the direct analog of applying a replacement rule for b to a symbolic expression that contains b: the b is replaced, and the result is “knitted back” to fill in where the b used to be.
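
A tiny illustration of that symbolic analog (the expression itself is arbitrary):

```wolfram
(* replace b deep inside an expression; everything else is left untouched *)
f[a, g[b, c], d] /. b -> h[x, y]
(* f[a, g[h[x, y], c], d] *)
```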

You can do what amounts to various kinds of “chemical functional programming” with ApplyReaction and PatternReaction. Here’s an example where we’re essentially building up a polymer by successive nesting of a reaction:

It’s often convenient to build pattern reactions symbolically using Wolfram Language “chemical primitives”. But PatternReaction also lets you specify reactions as SMARTS strings:

PDEs for Rods, Rubber and More

It’s been a 25-year journey, steadily increasing our built-in PDE capabilities. And in Version 13.1 we’ve added several (admittedly somewhat technical) features that have been much requested, and are important for solving particular kinds of real-world PDE problems. The first feature is being able to set up a PDE as axisymmetric. Normally a 2D diffusion term would be assumed Cartesian:

But now you can say you’re dealing with an axisymmetric system, with your coordinates being interpreted as radius and height, and everything assumed to be symmetrical in the azimuthal direction:
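
In formulas, and assuming a unit diffusion coefficient for simplicity, the difference between the two interpretations of the same diffusion term is:

$$
\text{Cartesian } (x, y):\quad \nabla^2 u = \frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}
$$

$$
\text{axisymmetric } (r, z):\quad \nabla^2 u = \frac{1}{r}\frac{\partial}{\partial r}\!\left(r\,\frac{\partial u}{\partial r}\right) + \frac{\partial^2 u}{\partial z^2}
$$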

What’s important about this is not just that it makes it easy to set up certain kinds of equations, but also that in solving equations axial symmetry can be assumed, allowing much more efficient methods to be used:

Also in Version 13.1 is an extension to the solid mechanics modeling framework introduced in Version 13.0. Just as there’s viscosity that damps out motion in fluids, so there’s a similar phenomenon that damps out motion in solids. It’s more of an engineering story, and it’s usually described in terms of two parameters: mass damping and stiffness damping. And now in Version 13.1 we support this kind of so-called Rayleigh damping in our modeling framework.
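
In its standard textbook form (the symbols here are the conventional ones, not taken from our framework), Rayleigh damping builds the damping matrix C from the mass matrix M and the stiffness matrix K, with α the mass damping parameter and β the stiffness damping parameter:

$$
M\,\ddot{u} + C\,\dot{u} + K\,u = f, \qquad C = \alpha\,M + \beta\,K
$$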

Another phenomenon included in Version 13.1 is hyperelasticity. If you bend something like metal beyond a certain point (but not so far that it breaks), it’ll stay bent. But materials like rubber and foam (and some biological tissues) can “bounce back” from basically any deformation.

Let’s imagine that we have a square of rubber-like material. We anchor it on the left, and then we pull it on the right with a certain force. What does it do?

This defines the properties of our material:

We define variables for the problem, representing x and y displacements by u and v:

Now we can set up our whole problem, and solve the PDEs for it for each value of the force:

Then one can plot the results, and see the rubber being nonlinearly stretched:

There’s in the end considerable depth in our handling of PDE-based modeling, and our increasing ability to do “multiphysics” computations that span multiple types of physics (mechanical, thermal, electromagnetic, acoustic, …). And by now we’ve got nearly 1000 pages of documentation purely about PDE-based modeling. And for example in Version 13.1 we’ve added a monograph specifically about hyperelasticity, as well as expanded our collection of documented PDE models.

Interpretable Machine Learning

Let’s say you have trained a machine learning model and you apply it to a particular input. It gives you some result. But why? What were the important features in the input that led it to that result? In Version 13.1 we’re introducing several functions that try to answer such questions.

Here’s some simple “training data”:

We can use machine learning to make a predictor for this data:

Applying the predictor to a particular input gives us a prediction:

What was important in making this prediction? The "SHAPValues" property introduced in Version 12.3 tells us what contribution each feature made to the result; in this case v was more important than u in determining the value of the prediction:

But what about in general, for all inputs? The new function FeatureImpactPlot gives a visual representation of the contribution or “impact” of each feature in each input on the output of the predictor:
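
Putting the steps so far together, a sketch of the workflow might look like this. The training data is synthetic and entirely my own invention (two features named u and v, and a made-up target), so the numbers it produces have no connection to the original example:

```wolfram
(* synthetic training data with two named features (hypothetical) *)
data = Table[
   With[{u = RandomReal[], v = RandomReal[]},
    <|"u" -> u, "v" -> v|> -> u + 2 v^2],
   200];

p = Predict[data];

input = <|"u" -> 0.5, "v" -> 0.5|>;
p[input]                  (* predicted value *)
p[input, "SHAPValues"]    (* per-feature contributions to this prediction *)

FeatureImpactPlot[p]      (* contributions across many inputs *)
```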

What does this plot mean? It’s basically showing how often there are what contributions from values of the two input features. And with this particular predictor we see that there’s a wide range of contributions from both features.

If we use a different method to create the predictor, the results can be quite different. Here we’re using linear regression, and it turns out that with this method v never has much impact on predictions:

If we make a predictor using a decision tree, the feature impact plot shows the splitting of impact corresponding to different branches of the tree:

FeatureImpactPlot gives a kind of bird’s-eye view of the impact of different features. FeatureValueImpactPlot gives more detail, showing as a function of the actual values of input features the impact points with those values would have on the final prediction (and, yes, the actual points plotted here are based on data simulated on the basis of the distribution inferred by the predictor; the actual data is usually too big to want to carry around, at least by default):

CumulativeFeatureImpactPlot gives a visual representation of how “successive” features affect the final value for each (simulated) data point:

For predictors, feature impact plots show impact on predicted values. For classifiers, they show impact on (log) probabilities for particular outcomes.

Model Predictive Control

One area that leverages many algorithmic capabilities of the Wolfram Language is control systems. We first started developing control systems functionality more than 25 years ago, and by Version 8.0 ten years ago we started to have built-in functions like StateSpaceModel and BodePlot specifically for working with control systems.

Over the past decade we’ve progressively been adding more built-in control systems capabilities, and in Version 13.1 we’re now introducing model predictive controllers (MPCs). Many simple control systems (like PID controllers) take an ad hoc approach in which they effectively just “watch what a system does” without trying to have a specific model for what’s going on inside the system. Model predictive control is about having a specific model for a system, and then deriving an optimal controller based on that model.
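
For orientation, a generic textbook formulation of linear model predictive control looks like the following. The horizon N, the weight matrices Q, R and P, and the constraint sets are generic notation of mine, and this is not necessarily the exact problem that our functions set up:

$$
\min_{u_0,\dots,u_{N-1}} \; \sum_{k=0}^{N-1} \left( x_k^{\mathsf T} Q\, x_k + u_k^{\mathsf T} R\, u_k \right) + x_N^{\mathsf T} P\, x_N
\quad \text{subject to} \quad
x_{k+1} = A\,x_k + B\,u_k, \;\; x_k \in \mathcal{X}, \;\; u_k \in \mathcal{U}
$$

The model enters through the dynamics constraint, the costs through the weights, and the particular constraints through the sets of allowed states and inputs.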

For example, we could have a state-space model for a system:

Then in Version 13.1 we can derive (using our parametric optimization capabilities) an optimal controller that minimizes a certain set of costs while satisfying particular constraints:

The SystemsModelControllerData that we get here contains a variety of elements that allow us to automate the control design and analysis workflow. As an example, we can get a model that represents the controller running in a closed loop with the system it is controlling:

Now let’s imagine that we drive this whole system with the input:

Now we can compute the output response for the system, and we see that both output variables are driven to zero through the operation of the controller:

Within the SystemsModelControllerData object generated by ModelPredictiveController is the actual controller computed in this case—using the new construct DiscreteInputOutputModel:

What actually is this controller? Ultimately it’s a collection of piecewise functions that depends on the values of states x1[t] and x2[t]:

And this shows the different state-space regions on which the controller’s piecewise functions are defined:

Algorithmic and Randomized Quizzes

In Version 13.0 we introduced our question and assessment framework that allows you to author things like quizzes in notebooks, together with assessment functions, then deploy these for use. In Version 13.1 we’re adding capabilities to let you algorithmically or randomly generate questions.

The two new functions QuestionGenerator and QuestionSelector let you specify questions to be generated according to a template, or randomly selected from a pool. You can either use these functions directly in pure Wolfram Language code, or you can use them through the Question Notebook authoring GUI.

When you select Insert Question in the GUI, you now get a choice between Fixed Question, Randomized Question and Generated Question:

Pick Randomized Question and you’ll get an interface that allows you to enter questions, and eventually produce a QuestionSelector, which will select newly randomized questions for every copy of the quiz that’s produced:

Version 13.1 also introduces some enhancements for authoring questions. An example is a pure-GUI “no-code” way to specify multiple-choice questions:

The ExprStruct Data Structure

In the Wolfram Language expressions normally have two aspects: they have a structure, and they have a meaning. Thus, for example, Plus[1,1] has both a definite tree structure and a value (namely 2).

In the normal operation of the Wolfram Language, the evaluator is automatically applied to all expressions, and essentially the only way to avoid evaluation by the evaluator is to insert “wrappers” like Hold and Inactive that necessarily change the structure of expressions.
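
To see concretely how a wrapper changes the structure, here’s a small sketch using Hold:

```wolfram
Plus[1, 1]                 (* the evaluator immediately gives 2 *)

held = Hold[Plus[1, 1]]    (* evaluation is blocked... *)
Head[held]                 (* ...but the head is now Hold, not Plus *)
Extract[held, {1, 0}]      (* Plus sits one level further down *)

ReleaseHold[held]          (* removing the wrapper lets evaluation happen: 2 *)
```

Any code that works on the structure of the held expression has to account for that extra Hold layer.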

In Version 13.1, however, there’s a new way to handle “unevaluated” expressions: the "ExprStruct" data structure. ExprStructs represent expressions as raw data structures that are never directly seen by the evaluator, but can nevertheless be structurally manipulated.

This creates an ExprStruct corresponding to the expression {1,2,3,4}:

This structurally wraps Total around the list, but does no evaluation:

One can also see this by “visualizing” the data structure:

Normal takes an ExprStruct object and converts it to a normal expression, to which the evaluator is automatically applied:

One can do a variety of essentially structural operations directly on an ExprStruct. This applies Plus, then maps Factorial over the resulting ExprStruct:

The result is an ExprStruct representing an unevaluated expression:

With "MapImmediateEvaluate" there is an evaluation done each time the mapping operation generates an expression:

One powerful use of ExprStruct is in doing code transformations. And in a typical case one might want to import expressions from, say, a .wl file, then manipulate them in ExprStruct form. In Version 13.1 Import now supports an "ExprStructs" import element:

This selects expressions that correspond to definitions, in the sense that they have SetDelayed as their head:

Here’s a visualization of the first one:

Super-Efficient Compiler-Based External Code Interaction

Let’s say you’ve got external code that’s in a compiled C-compatible dynamic library. An important new capability in Version 13.1 is a super-efficient and very streamlined way to call any function in a dynamic library directly from within the Wolfram Language.

It’s part of the accelerating stream of developments made possible by the large-scale infrastructure build-out that we’ve been doing in connection with the new Wolfram Language compiler, and in particular it often leverages our sophisticated new type-handling capabilities.

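As a first example, let’s consider the RAND_bytes (“cryptographically secure pseudorandom number generator”) function in OpenSSL. Its C declaration is int RAND_bytes(unsigned char *buf, int num): it takes a buffer to fill and a number of bytes, and returns an int status code.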

In Version 13.1 we now have a symbolic way to represent such a declaration directly in the Wolfram Language:

(In general we’d also have to specify the library that this function is coming from. OpenSSL happens to be a library that’s loaded by default with the Wolfram Language so you don’t need to mention it.)

There are quite a few new things going on in the declaration. First, as part of our collection of compiled types, we’re adding ones like "CInt" and "CChar" that refer to raw C language types (here int and char). There’s also "CArray", which is for declaring C arrays. Notice the new ::[ ... ] syntax for TypeSpecifier that allows compact specifications for parametrized types, like the char* here, which is described in Wolfram Language as "CArray"::["CChar"].
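Putting the pieces described above together, the declaration for RAND_bytes might look roughly like the following. This is a sketch under the assumption that LibraryFunctionDeclaration takes the function name followed by a typed signature; the variable name dec is just illustrative.

```wolfram
(* C signature: int RAND_bytes(unsigned char *buf, int num) *)
dec = LibraryFunctionDeclaration[
   "RAND_bytes",
   {"CArray"::["CChar"], "CInt"} -> "CInt"  (* buffer and byte count in, status code out *)
];
```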

Having set up the declaration, we now need to create an actual function that can take an argument from Wolfram Language, convert it to something suitable for the library function, then call the library function, and convert the result back to Wolfram Language form. Here’s a way to do that in this case:

What we get back is a compiled code function that we can directly use, and that works by very efficiently calling the library function:

The FunctionCompile above uses several constructs that are new in Version 13.1. What it fundamentally does is to take a Wolfram Language integer (which it assumes to be a machine integer), cast it into a C integer, then pass this to the library function, along with a specification of a C char * into which the library function will put its result, and from which the final Wolfram Language result will be retrieved.

It’s worth emphasizing that most of the complexity here has to do with handling data types and conversions between them, something that the Wolfram Language usually goes to a lot of trouble to avoid exposing the user to. But when we’re connecting to external languages that make fundamental use of types, there’s no choice but to deal with them, and the complexity they involve.

In the FunctionCompile above the first new construct we encounter is

The basic purpose of this is to create the buffer into which the external function will write its results. The buffer is an array of bytes, declared in C as char *, or here as "CArray"::["CChar"]. There’s an actual wrinkle though: who’s going to manage the memory associated with this array? The "Managed":: type specifier says that the Wolfram Language wrapper will do memory management for this object.

The next new construct we see in the FunctionCompile is

Cast is one of a family of new functions that can appear in compilable code, but have no significance outside the compiler. Cast is used to specify that data should be converted to a form consistent with a specified type (here a C int type).

The core of the FunctionCompile is the use of LibraryFunction, which is what actually calls the external library function that we declared earlier.

The last step in the function compiled by FunctionCompile is to extract data from the C array and return it as a Wolfram Language list. To do this requires the new function FromRawPointer, which actually retrieves data from a specified location in memory. (And, yes, this is a raw dereferencing operation that will cause a crash if it isn’t done correctly.)

All of this may at first seem rather complicated, but for what it’s doing, it’s remarkably simple—and greatly leverages the whole symbolic structure of the Wolfram Language. It’s also worth realizing that in this particular example, we’re just dipping into compiled code and then returning results. In larger-scale cases we’d be doing many more operations—typically specified directly by top-level Wolfram Language code—within compiled code, and so type declaration and conversion operations would be a smaller fraction of the code we have to write.

One feature of the example we’ve just looked at is that it only uses built-in types. But in Version 13.1 it’s now possible to define custom types, such as the analog of C structs. As an example, consider the function ldiv from the C standard library. This function returns an object of type ldiv_t, a C struct with two long members, quot and rem, holding the quotient and the remainder.

Here’s the Wolfram Language version of this declaration, based on setting up a "Product" type named "CLDivT":

(The "ReferenceSemantics"→False option specifies that this type will actually be passed around as a value, rather than just a pointer to a value.)
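For orientation, here is a sketch of what such a type declaration could look like. In C, ldiv_t is a struct with two long fields, quot and rem. The exact argument form of TypeDeclaration shown here is an assumption based on the description in the text, and the name structDec is purely illustrative.

```wolfram
(* A "Product" type mirroring the C struct ldiv_t {long quot; long rem;} *)
structDec = TypeDeclaration["Product", "CLDivT",
   <|"quot" -> "CLong", "rem" -> "CLong"|>,
   "ReferenceSemantics" -> False  (* pass by value, as C does for ldiv_t *)
];
```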

Now the declaration for the ldiv function can use this new custom type:

The final definition of the call to the external ldiv function is then:

And now we can use the function (and, yes, it will be as efficient as if we’d directly written everything in C):

The examples we’ve given here are very small ones. But the whole structure for external function calls that’s now in Version 13.1 is set up to handle large and complex situations—and indeed we’ve been using it internally with great success to set up important new built-in pieces of the Wolfram Language.

One of the elements that’s often needed in more complex situations is more sophisticated memory management, and our new "Managed" type provides a convenient and streamlined way to do this.

This makes a compiled function that creates an array of 10,000 machine integers:

Running the function effectively “leaks” memory:

But now define a version of the function in which the array is “managed”:

Now the memory associated with the array is automatically freed when it is no longer referenced:

Directly Compiling Function Definitions

If you have an explicit pure function (Function[...]) you can use FunctionCompile to produce a compiled version of it. But what if you have a function that’s defined using downvalues, as in:

In Version 13.1 you can directly compile function definitions like this. But, as is the nature of compilation, you have to declare what types are involved. Here is a declaration for the function fac that says it takes a single machine integer, and returns a machine integer:

Now we can create a compiled function that computes fac[n]:

The compiled function runs significantly faster than the ordinary symbolic definition:

The ability to declare and use downvalue definitions in compilation has the important feature that it allows you to write a definition just once, and then use it both directly, and in compiled code.
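The workflow just described can be sketched end to end as follows. The recursive definition of fac is an illustrative assumption (the original definition may differ), and the use of DownValuesFunction inside FunctionDeclaration follows the Version 13.1 documentation as best recalled, so treat the exact form as something to check.

```wolfram
(* An ordinary downvalue definition, usable directly at top level *)
fac[n_] := If[n == 0, 1, n*fac[n - 1]]

(* Declare that fac maps one machine integer to a machine integer,
   with its implementation taken from its downvalues *)
dec = FunctionDeclaration[fac,
   Typed[{"MachineInteger"} -> "MachineInteger"]@DownValuesFunction[fac]];

(* Compile a function that calls the declared fac *)
cfac = FunctionCompile[dec, Function[Typed[n, "MachineInteger"], fac[n]]];

(* The same definition serves both the symbolic and the compiled call *)
fac[10] == cfac[10]

(* Compare timings; 20! still fits in a 64-bit machine integer *)
RepeatedTiming[fac[20]]
RepeatedTiming[cfac[20]]
```

Larger arguments would overflow a machine integer in the compiled version, while the symbolic definition would carry on with arbitrary-precision integers.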

Manipulating Expressions in Compiled Code

An early focus of the Wolfram Language compiler was handling low-level “machine” types, such as integers or reals of certain lengths. But one of the advances in the Version 13.1 compiler is direct support for an "InertExpression" type for representing any Wolfram Language expression within compiled code.

When you use something like FunctionCompile, it will explicitly try to compile whatever Wolfram Language expressions it’s given. But if you wrap the expressions with InertExpression the compiler will then just treat the expressions as inert structural objects of type "InertExpression". This sets up a compiled function that constructs an expression (implicitly of type "InertExpression"):

Evaluating the function constructs and then returns the expression:

Normally, within the compiler, an "InertExpression" object will be treated in a purely structural way, without any evaluation (and, yes, it’s closely related to the "ExprStruct" data structure). But sometimes it’s useful to perform evaluation on it, and you can do this with InertEvaluate:

Now the InertEvaluate does the evaluation before wrapping Hold around the inert expression:

The ability to handle expressions directly in the compiler might seem like some kind of detail. But it’s actually hugely important in opening up possibilities for future development with the Wolfram Language. For the past 35 years, we’ve internally been able to write low-level expression manipulation code as part of the C language core of the Wolfram Language kernel. But the ability of the Wolfram Language compiler to handle expressions now opens this up, and lets anyone write maximally efficient code for manipulating expressions that interoperates with everything else in the Wolfram Language.

And Still More…

Even beyond all the things I’ve discussed so far, there are all sorts of further additions and enhancements in Version 13.1, dotted throughout the system.

InfiniteLineThrough and CircularArcThrough have been added for geometric computation, and geometric scene specification. Geometric scenes can now be styled for custom presentation:

There are new graph functions: GraphProduct, GraphSum and GraphJoin:

And there are new built-in families of graphs: TorusGraph and BuckyballGraph:

You can mix images directly into Graphics (and Graphics3D):

AbsoluteOptions now resolves many more options in Graphics, telling you what explicit value was used when you gave an option just as Automatic.
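A small sketch of the kind of query this is about: the plot below leaves its options at their defaults, and AbsoluteOptions is then asked which explicit values were used. Which options resolve fully will depend on the version.

```wolfram
(* The options are left at their Automatic defaults when the plot is constructed *)
plot = Plot[Sin[x], {x, 0, 2 Pi}];

(* Ask which explicit values were actually used *)
AbsoluteOptions[plot, PlotRange]
AbsoluteOptions[plot, {Axes, AspectRatio}]
```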

The function LeafCount now has a Heads option, to count expression branches inside heads. Splice works with any head, not just List. Functions like IntersectingQ now have SameTest options. You can specify TimeZone options using geographic entities (like cities).
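Here is a brief sketch of how some of these might be used. The symbols f, g, a, b, c, d, x and y are arbitrary placeholders, and the option values are just illustrative choices.

```wolfram
(* Splice into a head other than List by naming the head as a second argument *)
f[a, Splice[{b, c}, f], d]

(* Compare leaf counts with and without the contents of heads *)
LeafCount[g[x][y]]
LeafCount[g[x][y], Heads -> False]

(* Treat 3 and 3. as the same element when testing for intersection *)
IntersectingQ[{1, 2, 3}, {3., 4}, SameTest -> Equal]
```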

FindClusters now lets you specify exactly how many clusters you want to partition your data into, as well as supporting UpTo[n].
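As a quick illustration with made-up one-dimensional data, the two forms of the cluster count specification look like this:

```wolfram
data = {1, 2, 3, 10, 11, 12, 20, 21, 22};

(* Ask for exactly three clusters *)
FindClusters[data, 3]

(* Ask for at most five clusters, letting the method pick the count *)
FindClusters[data, UpTo[5]]
```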